Voicebot 2.0: Why LMM technology is a “game changer” in voice communication

Voicebot 2.0: Why LMM technology is a “game changer” in voice communication

For years, talking to artificial intelligence was associated with the need for humans to adapt to machines. We had to speak clearly, use simple words, and patiently endure delays in speech processing. The year 2026 puts an end to that. The arrival of Voicebot 2.0 technology, based on LMM (Large Multimodal Models), marks the most significant qualitative leap in telecommunications history. This is not just an evolution – it’s a moment when AI starts to understand not just words, but the entire context of human interaction.

In brief: Key takeaways from the article

- LMM technology allows for real-time conversations with minimal latency, eliminating unnatural pauses.

- Multimodality means that the system understands not only content (text) but also tone of voice, emotions, and subtle sound signals.

- Voicebot 2.0 handles interruptions – a patient or client can interrupt the bot mid-sentence, and it will immediately respond as a human would.

- Machine empathy becomes a reality, allowing for deeper relationships in sectors such as healthcare or debt collection.

- Implementing Voicebot 2.0 is an investment in the highest available customer service standard, significantly increasing customer satisfaction (NPS).

End of robotic sound: What changes with LMM?

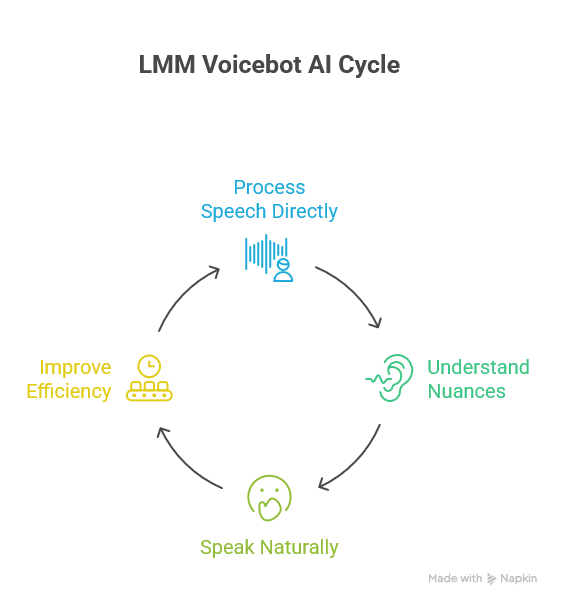

Previous medical voicebots relied on a chain of several separate systems: speech-to-text (ASR), logical analysis, and speech synthesis (TTS). Each of these stages generated delays and the risk of losing context. LMM models are “end-to-end” systems – speech is processed directly by the neural network.

As a result, the next-generation voicebot AI not only speaks naturally but also understands sarcasm, hesitation, or urgency in the caller’s voice. For industries such as logistics or healthcare, where precision matters, this is a fundamental change. A conversation with a virtual consultant stops being a tiresome chore and becomes an efficient dialogue.

Latency and Full-Duplex: A conversation without barriers

One of the biggest problems with generation 1.0 was latency (delay). Using LMM models in EasyCall solutions has reduced response time to below 500ms, a value imperceptible to the human ear.

Moreover, Voicebot 2.0 operates in Full-Duplex mode. This means the system listens and speaks simultaneously. For example, if the bot is giving instructions for a medical examination and the patient says, “Excuse me, could you repeat that?” the bot will not finish its sentence. It will immediately stop, apologize, and repeat the information. This approach is crucial in sectors requiring high trust, where data security in voicebots must go hand in hand with excellent User Experience.

Table: Comparison of Voicebot 1.0 vs. Voicebot 2.0 (LMM)

| Technical feature | Voicebot 1.0 (Market standard) | Voicebot 2.0 (LMM / EasyCall) | Impact on business |

|---|---|---|---|

| Response time (Latency) | 2-4 seconds | < 0.5 seconds | Smooth conversation like with a human |

| Handling interruptions | None (bot must finish speaking) | Active listening (Full-Duplex) | Natural dialogue, no irritation |

| Context understanding | Limited to keywords | Complete (analysis of intent and emotions) | Effectiveness in handling complex issues |

| Speech synthesis (TTS) | Often “metallic,” emotionless | Emotional, adaptive | Building empathy and trust |

| Multilingualism | Requires switching models | Native (conversation in multiple languages) | Service for foreign-language customers |

Practical applications: Where LMM changes the game

The introduction of Voicebot 2.0 is not just a technical novelty; it brings real benefits to daily operations. In healthcare, where reducing missed calls is a priority, LMM enables a much more personalized patient experience. The bot can sense a patient’s anxiety and calm them, something older technologies cannot do. Therefore, this technology is also ideally suited for soft debt collection.

In the commercial sector, where every minute counts, a communication strategy based on LMM allows for advanced sales and lead handling. The bot can argue, refute objections, and conduct negotiations – a skill that was once reserved only for the best contact center agents.

Security and ethics of multimodal models

The power of LMM technology comes with responsibility. At EasyCall, we ensure that every conversation handled by Voicebot 2.0 is fully monitored for security. The speech signal is processed according to the highest standards, as evidenced by our deployments in sensitive areas like private hospital call centers. LMM models are trained to eliminate bias and ensure full transparency in AI decision-making processes.

Summary: Your business in the AI 2.0 era

Voicebot 2.0, based on LMM models, marks the end of the era of “phone automation” and the beginning of the era of digital collaborators. This technology doesn’t just get things done – it builds customer experiences at a level unavailable to earlier solutions. Implementing LMM signals to the market that your company values innovation and respects your customers’ time.

Want to hear the difference between a regular bot and Voicebot 2.0 technology? Our advisors will demonstrate a demo that will change your perception of voice automation. Contact EasyCall and give your company the edge offered by the world’s most advanced artificial intelligence!

FAQ – Voicebot 2.0 and LMM Technology

What exactly differentiates the LMM model from a traditional bot based on ChatGPT?

A traditional language model (LLM) processes text. To interact with it, an additional system for converting speech to text and text to speech is needed. The LMM (Multimodal) model is natively “voice-oriented” – it understands sound directly, eliminating errors in text translation and drastically speeding up responses.

Porozmawiaj z naszym specjalistą

Can Voicebot 2.0 recognize irony or sarcasm from a customer?

Yes, thanks to the analysis of sound parameters (prosody), LMM models can effectively identify sarcasm or irritation, even if the words themselves sound neutral. This helps avoid comical or tactless bot responses in difficult situations.

How does LMM technology handle dialects or unclear speech from elderly people?

Multimodal models are significantly more resistant to noise and unclear pronunciation. They learn speech patterns holistically, allowing them to derive meaning from the context of the conversation, even if some words are spoken unclearly.

Is implementing an LMM-based bot more difficult than standard solutions?

For the client, the process is almost identical. On our side, we handle the configuration of the powerful computational resources required for LMM operation. Thanks to EasyCall’s cloud architecture, a healthcare facility or logistics company gains access to this technology via a simple API.

Can Voicebot 2.0 decide to transfer the conversation to a human?

Yes. The bot continuously monitors the “certainty” of its responses and the level of satisfaction of the interlocutor. If it detects that the issue is too complex or the customer feels discomfort, it smoothly transfers the conversation to a consultant, along with a full summary of the dialogue so far.

Can LMM models speak multiple languages during a single conversation?

This is one of the most impressive features of Voicebot 2.0. If the customer switches from Polish to English during the conversation, the bot will seamlessly start responding in the same language, maintaining the thread of the conversation.

Is LMM technology compliant with the AI Act and EU regulations?

Yes, EasyCall closely monitors the development of EU legislation. Our LMM systems are designed according to “Ethics by Design” principles, ensuring users are informed about interacting with AI and guaranteeing full control over personal data.

What are the technical requirements for an internet connection to maintain such low latency?

Thanks to the optimization of voice transmission protocols (VoIP) in our infrastructure, the requirements are similar to standard IP telephony. Voicebot 2.0 technology is available even with standard business connections, ensuring crystal-clear sound quality.