Data security in public voicebots

Data security is critical for public voicebots used in offices, banks, and municipal services. These tools must protect personal data in line with GDPR and prevent leaks of the data stored in their databases. What security measures do they use? Read on for the main risks and the key types of data processed by public voicebots.

Public voicebots – what are they?

The category of public voicebots covers all tools used by public administration units, such as offices, courts, hospitals, NFZ clinics, and municipal service helplines. It also includes voicebots operating bank helplines. Because of how they are used, these tools handle sensitive personal data on a daily basis. This makes a high level of data protection crucial for clients, local residents, and contractors. The entire process should resemble the practices used to maintain data security in call centers.

What data do public voicebots process?

Public voicebots process a range of basic personal data, including sensitive information that enables identification of an individual. These include in particular:

- basic personal details (first and last name),

- identification data (PESEL number, case number, address),

- contact details (phone number, e-mail address, social media accounts),

- voice data (voice characteristics, which count as biometric data when used to identify the caller),

- conversation content (stored as audio recordings or transcripts),

- contextual data (date of the call, history of previous interactions, etc.).

The theft of such data can lead to serious consequences, including identity theft or the use of collected information for other cybercrimes. For this reason, public institutions should perform a thorough analysis and choose only trusted and carefully verified providers of voicebot or chatbot technologies based on artificial intelligence and large datasets.

Personal data security in public voicebots – main threats

Cybercriminals constantly look for vulnerabilities in technology providers’ systems that would let them steal personal data and other confidential information. The main threats related to public voicebots include:

- gaining unauthorized access to call recordings or transcripts,

- anonymization errors, where personal data remains in recordings or transcripts,

- interception of data during calls due to lack of encryption,

- voice-spoofing attacks involving impersonation of another person,

- accidental disclosure of sensitive data by employees,

- non-compliance of the tool with GDPR.

To reduce the risk, it is advisable to use only trusted providers of voice assistant technologies, including EasyCall.
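The anonymization errors listed above can be partly caught with an automated check on transcripts. The following is a minimal sketch, not a description of any provider's actual pipeline: the function names, the placeholder tokens `[PESEL]` and `[EMAIL]`, and the simple e-mail pattern are all assumptions made for illustration. It masks e-mail addresses and 11-digit numbers that pass the PESEL checksum, and offers an audit helper that lists such identifiers if they are still present in a supposedly anonymized transcript.

```python
import re

# Candidate PESEL: exactly 11 digits that are not part of a longer digit run.
PESEL_CANDIDATE = re.compile(r"(?<!\d)\d{11}(?!\d)")
# Deliberately simple e-mail pattern (an assumption; real addresses can be more exotic).
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")

# PESEL checksum weights applied to the first ten digits.
PESEL_WEIGHTS = (1, 3, 7, 9, 1, 3, 7, 9, 1, 3)


def is_valid_pesel(digits: str) -> bool:
    """Return True if the 11-digit string passes the PESEL checksum."""
    if len(digits) != 11 or not digits.isdigit():
        return False
    total = sum(w * int(d) for w, d in zip(PESEL_WEIGHTS, digits))
    return (10 - total % 10) % 10 == int(digits[10])


def redact_transcript(text: str) -> str:
    """Mask e-mail addresses and checksum-valid PESEL numbers."""
    text = EMAIL.sub("[EMAIL]", text)
    return PESEL_CANDIDATE.sub(
        lambda m: "[PESEL]" if is_valid_pesel(m.group()) else m.group(),
        text,
    )


def leftover_identifiers(text: str) -> list[str]:
    """Audit helper: list PESEL numbers and e-mails still present in the text."""
    found = [
        m.group()
        for m in PESEL_CANDIDATE.finditer(text)
        if is_valid_pesel(m.group())
    ]
    found += EMAIL.findall(text)
    return found
```

Checking the checksum keeps other 11-digit values, such as some case numbers, readable, at the cost of missing a PESEL that speech recognition transcribed with a wrong digit. A stricter policy could mask every 11-digit run instead. Names, addresses, and spoken phone numbers are not covered by this sketch and need separate handling.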

Talk to our specialist

GDPR and voicebots

AI voicebots are subject to GDPR just like any other system processing personal data. Compliance with the General Data Protection Regulation, applicable since 25 May 2018, is therefore necessary if:

- conversations with the voicebot contain data that can identify an individual,

- the system records conversations or generates transcripts,

- collected data is later used for other purposes (e.g. training AI models, service quality analysis, preparing interaction history).

In practice, this means that nearly every voicebot implemented in the public sector is subject to GDPR, and its deployment should be consulted with a data protection specialist. The processing needs a legal basis under Article 6 of GDPR; where that basis is consent, the caller must give it before the conversation proceeds. The exceptions are voicebots that operate offline, store no data, and fully anonymize the caller. Based on our years of experience, we can assist you with this.

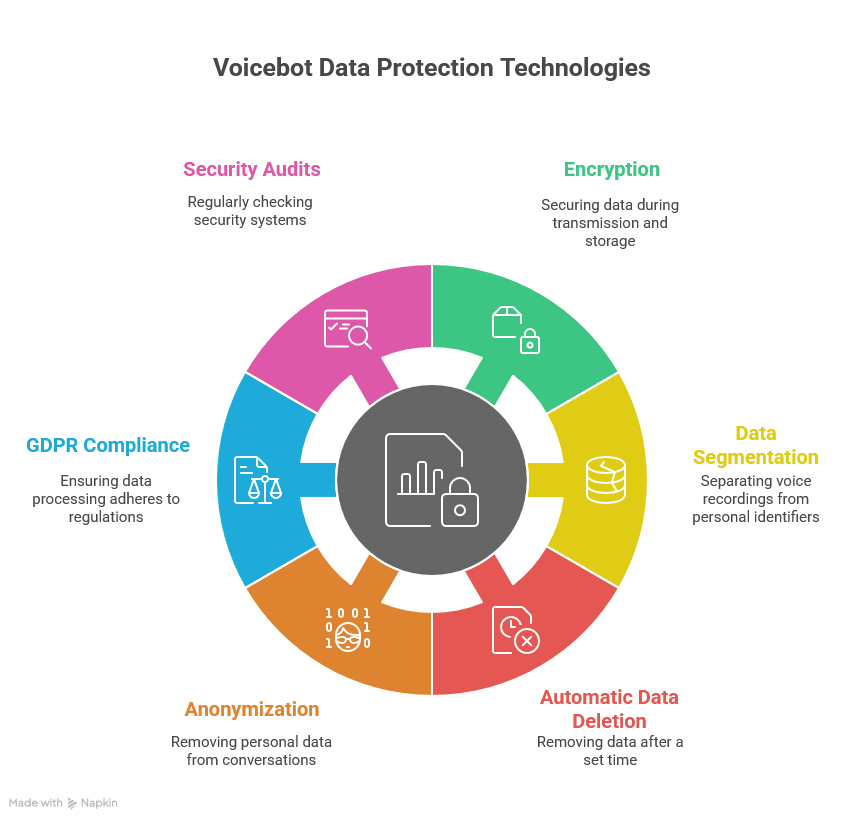

What technologies are used to protect personal data in voicebots?

Providers of public voicebots use various technologies and security systems designed to increase the level of personal data protection. Standard tools and procedures include:

- encryption of data in transit (TLS) and at rest (AES-256),

- data segmentation, including separating voice recordings from user identifiers or metadata,

- automatic data deletion after a defined period,

- anonymization or pseudonymization of conversations to remove or mask personal data,

- secure data processing in compliance with GDPR, including cloud-based solutions,

- regular security system audits.
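Three of the measures above (pseudonymization, separating recordings from user identifiers, and automatic deletion) can be illustrated with a short sketch. It assumes a keyed HMAC-SHA256 as the pseudonymization method and a 30-day retention period; the `Recording` type, the function names, and the retention value are hypothetical and not taken from any specific provider's system.

```python
import hashlib
import hmac
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

# Assumed retention period; the real value comes from the institution's policy.
RETENTION = timedelta(days=30)


def pseudonymize(caller_id: str, secret_key: bytes) -> str:
    """Derive a stable pseudonym from an identifier with keyed HMAC-SHA256.

    The key must be stored separately from the recordings; without it the
    pseudonym cannot be recomputed from a guessed phone number or PESEL.
    """
    return hmac.new(secret_key, caller_id.encode("utf-8"), hashlib.sha256).hexdigest()


@dataclass
class Recording:
    pseudonym: str        # stored with the audio instead of the raw identifier
    audio_path: str
    created_at: datetime  # timezone-aware timestamp


def purge_expired(
    recordings: list[Recording], now: Optional[datetime] = None
) -> list[Recording]:
    """Return only the recordings that are still inside the retention window."""
    now = now or datetime.now(timezone.utc)
    return [r for r in recordings if now - r.created_at < RETENTION]
```

A production job would also delete the expired audio files and their backups, not only drop the records from a list. Note that pseudonymized data still counts as personal data under GDPR, because the key holder can link it back to a person.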

How can an institution increase the protection of stakeholders’ data?

Beyond the security measures used by the technology provider, public sector units should take additional steps of their own, such as regular training for employees who work with AI. It is also crucial to prepare emergency procedures for hacking attacks, system failures, and data breaches, and to follow them as part of security incident management. Additionally, the organization needs to prepare and implement a privacy policy compliant with Article 13 of GDPR, and it must have a data processing agreement with the technology provider, as required by Article 28 of GDPR. Fulfilling all administrative and legal requirements is essential.

Secure AI implementations in government and the public sector

We thoroughly understand the challenges public institutions face when implementing voicebots. To address them, we have prepared a dedicated cooperation offer. For years, we have successfully supported the public sector in Poland in its digital transformation and adoption of artificial intelligence. At every stage, we follow principles of ethics and privacy in automated conversations and pay close attention to the security of personal data.

Be sure to explore the stories of our clients from the public sector, including:

- Ministry of Interior and Administration Hospital in Kraków

- County Hospital in Rawicz

- Copernicus Science Centre

Are you considering implementing AI in your office or public sector organization? We encourage you to contact our expert directly. They will thoroughly review your challenges and goals and prepare a tailored cooperation offer.